I don’t know what it is with tech platforms trying to push people into relationships with AI, and fostering emotional reliance on non-human entities that are powered by increasingly human-like responses.

Because that seems like a huge risk, right? I mean, I get that some see this as a way to address what’s become known as the “Loneliness Epidemic,” where online connectivity has increasingly left people isolated in their own digital worlds and, as a result, led to significant increases in social anxiety.

But surely the answer to that is more human connection, not replacing it, and reducing it even further, through digital means.

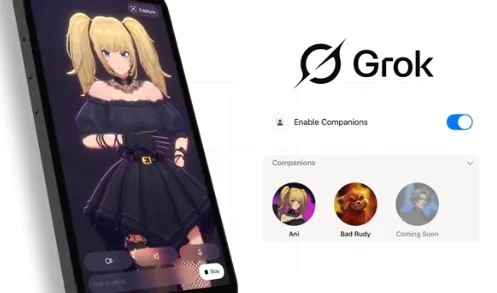

But that’s exactly what several AI providers seem to be pushing towards. xAI has launched its companions, which, as it has repeatedly highlighted, will engage in NSFW chats with users, while Meta is also developing more human-like entities, and even romantic partners, powered by AI.

But that comes with a high level of risk.

For one, building reliance on digital systems that can be taken away seems potentially problematic, and could lead to severe impacts the more we deepen such connections.

AI bots also don’t have a conscience; they simply respond to whatever the user inputs, based on the data sources they can access. That can lead users down harmful rabbit holes of misinformation, steered by their own prompts and the answers they go looking for.

And we’re already seeing incidents of real-world harm stemming from people seeking out actual meet-ups with AI entities, with older, less tech-savvy users particularly susceptible to being misled by AI systems that have been built to foster relationships.

Psychologists have also warned of the dangers of overreliance on AI tools for companionship. It’s an area whose depths we don’t really understand as yet, though early data, based on less sophisticated AI tools, has given some indication of the risks.

One report suggests that using AI as a romantic partner can lead to susceptibility to manipulation by the chatbot, perceived shame from the stigma surrounding romantic AI companions, and an increased risk of personal data misuse.

The study also highlights risks related to the erosion of human relationships as a result of over-reliance on AI tools.

Another psychological analysis found that:

“AI girlfriends can actually perpetuate loneliness because they dissuade users from entering into real-life relationships, alienate them from others, and, in some cases, induce intense feelings of abandonment.”

So rather than addressing the loneliness epidemic, AI companions could actually worsen it. Why, then, are the platforms so keen to give you the means to replace your real-world connections with actual human beings with computer-generated simulations?

Meta CEO Mark Zuckerberg has addressed this, noting that, in his view, AI companions will ultimately add to your social world, as opposed to detracting from it.

“That’s not going to replace the friends you have, but it will probably be additive in some way for a lot of people’s lives.”

In some ways, it seems like these tools are being built by increasingly lonely, isolated people who themselves crave the kind of connection that AI companions can provide. But that still overlooks the massive risks associated with building emotional connections to unreal entities.

And that’s going to become a much bigger concern.

As with social media, the issue on this front is that in ten years’ time, once AI companions are widely available and in much broader use, we’re going to be holding congressional hearings into their mental health impacts, based on a growing body of data indicating that human-AI relationships are, in fact, not beneficial for society.

We’ve seen this with Instagram and its impact on teens, and with social media more broadly, which has led to a new push to stop children from accessing these apps because of their negative impacts. But now, with billions of people hooked on their devices and constantly scrolling through short-form video feeds for entertainment, it’s too late, and we can’t really roll it back.

The same is going to happen with AI companions, and it seems like we should be taking steps right now to proactively address this, rather than putting our foot on the accelerator in an effort to lead the AI development race.

We don’t need AI bots for companionship; we need more human connection, and more cultural and social understanding of the actual people we share the world with.

AI companions aren’t likely to help in this respect. In fact, based on what the data tells us so far, they will likely make us more isolated than ever.